How Netflix Is Building a Recommendation Engine with LLMs

For years, Netflix's personalization was powered by a complex ecosystem of specialized machine learning models. These models were brilliant at predicting what a member might click on in the next 30 seconds, but they seemed to lack a deeper understanding of a user's long-term taste. The system was optimized for the next interaction but struggled to grasp the long-term narrative of a member's evolving preferences. This wasn't a bug in the code; it was a fundamental limitation of the entire approach.

The platform's personalization relied on a constellation of models for different parts of the user experience, such as the "Continue Watching" row, "Top Picks for You," and similar title generators. This intricate system was immensely successful, collectively driving over 80% of all viewing hours on the platform. This impact translated into saving the company over $1 billion annually by keeping subscribers engaged and reducing churn.

But this success came at a cost. The system had become a sprawling collection of siloed models, making maintenance a monumental effort. Innovation in one area was difficult to transfer to others, leading to a point of diminishing returns where engineering complexity began to outweigh the incremental gains.

A significant breakthrough came from an entirely different field: Natural Language Processing (NLP). The paradigm shift towards massive, pre-trained Large Language Models (LLMs) was rewriting the rules of AI. Netflix's engineers observed how a single, large foundation model could perform a variety of tasks with minimal fine-tuning, demonstrating a generalized understanding that specialized models lacked. This led to a realization that they could borrow this playbook. The goal wasn't to use a chatbot to pick movies, but to build a single, unified foundation model that could learn the very language of member taste from the ground up. This strategic pivot marks a new chapter in the company's personalization journey, moving from shallow predictions to a deep, holistic comprehension of what truly delights each member.

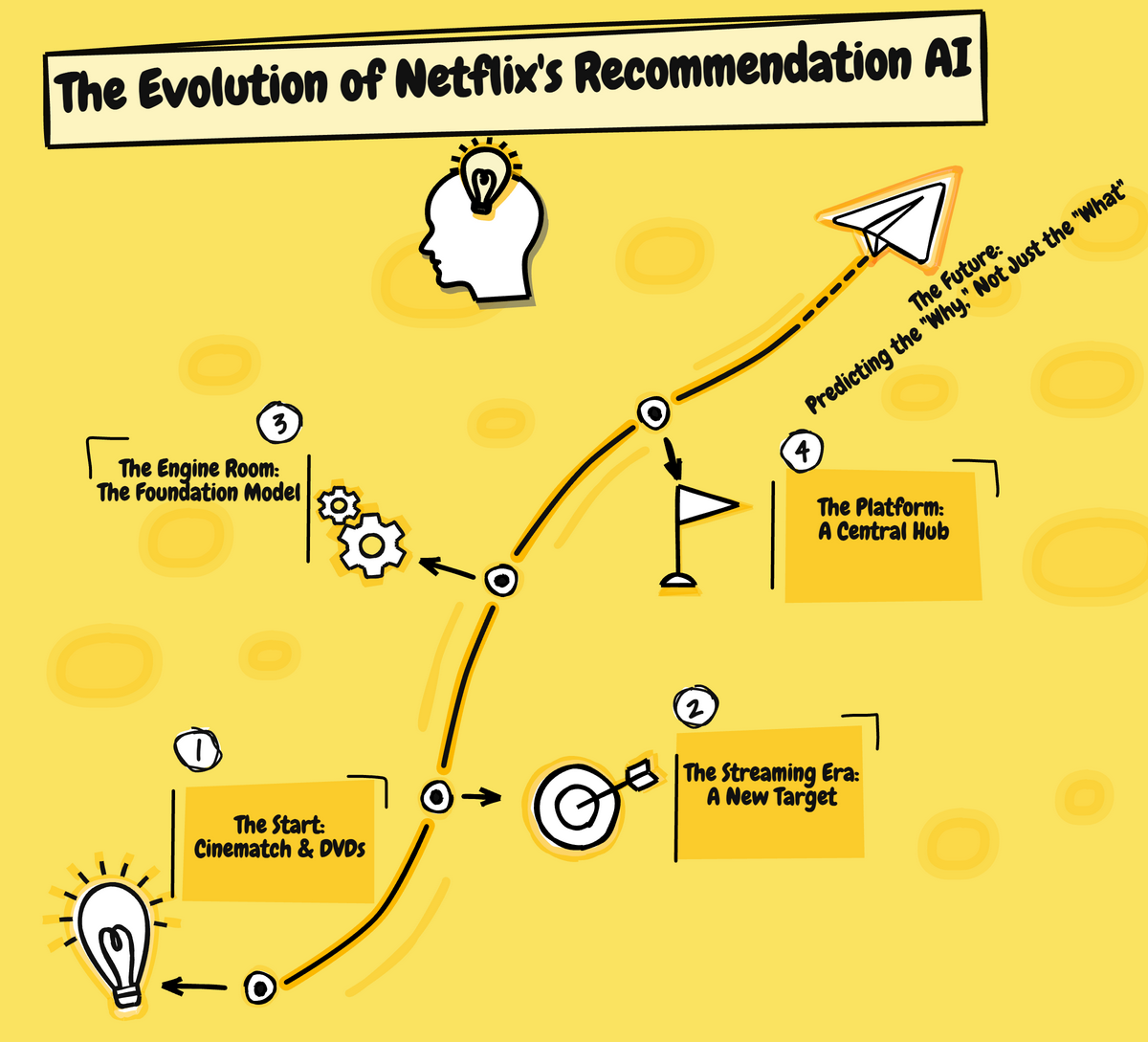

Charting the AI journey that powers your next binge-watch.

Part 1: A Brief History of Taste - From Cinematch to a Constellation of Models

The DVD Era & Cinematch

To understand Netflix's current trajectory, it's crucial to examine its origins. In the early 2000s, the business was about shipping DVDs in red envelopes, and the core challenge was helping members navigate a vast catalog. This was the birthplace of "Cinematch," the company's first-generation recommendation algorithm.

Cinematch was a classic collaborative filtering system that worked by analyzing the explicit 5-star ratings members gave to movies. The logic was simple but powerful: if two members rated a set of movies similarly, the system would recommend movies that one person loved but the other hadn't seen yet. The primary metric for success was Root Mean Square Error (RMSE), a statistical measure of how close the predicted ratings were to the members' actual ratings. For years, Netflix's data scientists and engineers worked to drive that RMSE "down and to the right".
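As a quick illustration of the metric, RMSE is the square root of the average squared difference between predicted and actual ratings. The sketch below uses made-up star ratings; the function name and the data are assumptions for illustration, not anything taken from Cinematch itself.

```python
import math

def rmse(predicted, actual):
    """Root mean square error between two equal-length lists of ratings."""
    if len(predicted) != len(actual) or not predicted:
        raise ValueError("need two non-empty lists of equal length")
    squared_errors = [(p - a) ** 2 for p, a in zip(predicted, actual)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))

# Made-up ratings on a 1-5 star scale, for illustration only.
predicted_stars = [4.0, 3.0, 5.0, 2.0]
actual_stars = [5.0, 3.0, 4.0, 2.0]
error = rmse(predicted_stars, actual_stars)
```

A lower value means the predicted ratings sit closer to what members actually gave, which is why the Netflix Prize target was framed as a percentage reduction in this number.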

The Netflix Prize and Its Legacy

The company's commitment to this problem was famously demonstrated in 2006 with the launch of the Netflix Prize. A massive anonymized dataset of member ratings was released, challenging the global research community to build an algorithm that could beat Cinematch's RMSE by 10% for a grand prize of $1 million.

The competition was a watershed moment for the field of machine learning, supercharging research into collaborative filtering and popularizing techniques like matrix factorization. While the winning solution from the "BellKor's Pragmatic Chaos" team was never fully implemented due to its engineering complexity, the prize had a profound impact. It cemented Netflix's reputation as a pioneer in applied machine learning and became a magnet for the world's top engineering talent.

The Streaming Era & The Rise of Implicit Signals

When the business pivoted to streaming, the nature of the available data changed completely. The system was no longer solely reliant on the sparse, explicit feedback of a 5-star rating. Instead, a torrent of rich, implicit signals poured in from every interaction: what users watched, how long they watched, when they paused, what they searched for, the time of day, and the device used.

This data was a goldmine. A member finishing a five-season series is a far stronger signal of enjoyment than a 5-star rating. The engineering challenge shifted from simply "predicting a rating" to the much more complex and valuable task of "predicting engagement" and, ultimately, member satisfaction and retention.

The Constellation of Models

This shift led to the development of the modern Netflix recommendation system: a complex federation of highly specialized models. Each part of the user interface was powered by different algorithms, each optimized for a specific purpose. The model that ranked titles in the "Trending Now" row had different constraints and objectives than the one that populated the "Because You Watched..." row.

This approach was incredibly successful and remains the engine that drives the vast majority of content discovery on the service. However, it created significant engineering pain points. The models were often confined to learning from a member's recent interaction history due to the strict millisecond-level latency requirements of the user interface. This led to a myopic view of member taste. Furthermore, the sheer number of models created a maintenance nightmare and made it difficult to propagate innovations across the entire ecosystem. A new technique developed by one team could take months to be adapted and adopted by others. The system was composed of beautifully handcrafted trees, but the view of the forest was being lost.

Part 2: The Foundation Model - Learning the Language of Member Taste

The Core Idea: Borrowing the LLM Playbook

The breakthrough for Netflix's engineers came from recognizing the parallels between language and member behavior. They began to conceptualize a member's entire interaction history—every play, search, rating, and browse—as a long, complex sentence, where individual interactions were the words. With this framing, the recommendation problem transformed into a familiar one from the world of LLMs: next-token prediction. The goal was to build a model that, given the "sentence" of a member's history, could predict the next "word" or interaction they were most likely to have.

This conceptual shift was profound. It allowed Netflix to leverage the power of self-supervised learning on its massive dataset of hundreds of billions of interactions, much like how models like GPT are trained on the vast corpus of the internet. This moved the company away from the laborious and often limiting process of manual feature engineering towards a more powerful, end-to-end, data-centric approach. The objective wasn't just to build a better predictor, but to build a model that could achieve a deep, contextual understanding of member behavior.

Deconstructing "Interaction Tokenization"

This is where the analogy to language models becomes technically challenging. In NLP, a token is typically a word or sub-word. At Netflix, however, an "interaction token" is far more complex. An interaction is a rich, multi-faceted event.

A process called interaction tokenization was developed. Each "token" is a heterogeneous data structure that packs in dozens of details. It includes attributes of the action itself (the member's locale, the time of day, the duration of the view, the device type) as well as detailed information about the content (the title ID, its genre, release country, and other metadata). Most of these features are directly embedded within the model, allowing for end-to-end learning.
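To make the idea of a heterogeneous token concrete, here is a minimal sketch of what such a structure might look like. The class name, field names, and the split between categorical and numeric fields are all assumptions for illustration; the real token reportedly carries dozens of attributes.

```python
from dataclasses import dataclass, asdict

@dataclass(frozen=True)
class InteractionToken:
    # Attributes of the action itself (hypothetical field names).
    action: str
    locale: str
    hour_of_day: int
    device_type: str
    duration_seconds: float
    # Attributes of the content.
    title_id: int
    genre: str
    release_country: str

# Fields assumed to be categorical, i.e. looked up in learned embedding tables.
CATEGORICAL_FIELDS = (
    "action", "locale", "hour_of_day", "device_type",
    "title_id", "genre", "release_country",
)

def embedding_lookups(token):
    """Return (field, value) pairs that a model could map to embedding vectors."""
    values = asdict(token)
    return [(name, values[name]) for name in CATEGORICAL_FIELDS]

token = InteractionToken(
    action="play", locale="en-US", hour_of_day=21, device_type="tv",
    duration_seconds=5400.0, title_id=12345, genre="drama", release_country="US",
)
lookups = embedding_lookups(token)
```

In this sketch the numeric `duration_seconds` field is deliberately left out of the lookups, since a continuous value would more plausibly be fed to the model directly or bucketed first.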

The tokenization process itself is conceptually similar to Byte Pair Encoding (BPE), a common technique in NLP. Sequences of interactions are analyzed, and adjacent, meaningful actions are merged to form new, compressed, higher-level tokens. For example, multiple short browsing events followed by a play action might be merged into a single "browse-and-play" token. This presented a difficult engineering challenge, requiring a delicate balance: if the tokenization was too lossy, crucial signals would be lost; if it was too granular, the sequences would become too long for the models to process efficiently. It was a constant trade-off between the length of the interaction history and the level of detail retained in each token.
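The merging step can be sketched as a single BPE-style pass: count adjacent pairs across histories, then fuse the most frequent pair into one token. This is a simplified illustration that works on bare action labels only, whereas the real tokens are rich structures; the function names and sample histories are made up.

```python
from collections import Counter

def most_frequent_pair(sequences):
    """Return the adjacent pair of actions that occurs most often, or None."""
    counts = Counter()
    for seq in sequences:
        counts.update(zip(seq, seq[1:]))
    return counts.most_common(1)[0][0] if counts else None

def merge_pair(seq, pair):
    """Replace every non-overlapping occurrence of `pair` with one fused token."""
    merged, i = [], 0
    while i < len(seq):
        if i + 1 < len(seq) and (seq[i], seq[i + 1]) == pair:
            merged.append(seq[i] + "+" + seq[i + 1])
            i += 2
        else:
            merged.append(seq[i])
            i += 1
    return merged

# Made-up interaction histories, reduced to action labels.
histories = [
    ["browse", "play", "pause"],
    ["browse", "play"],
    ["search", "play"],
]
pair = most_frequent_pair(histories)
compressed = [merge_pair(seq, pair) for seq in histories]
```

Repeating the pass shortens sequences further at the cost of detail, which is the length-versus-granularity trade-off described above.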

The Transformer Architecture at Netflix

With the data represented as sequences of these rich interaction tokens, the power of the transformer architecture could be brought to bear. The self-attention mechanism at the heart of transformers is exceptionally good at identifying long-range dependencies in sequential data, which was exactly what was needed to move beyond myopic, short-term predictions.

However, the context window limitation was an immediate hurdle. The most active members have interaction histories thousands of events long, far exceeding what a standard transformer can handle, especially given the sub-100ms latency budget for real-time recommendations. This was tackled with a two-pronged strategy:

During Training: Since feeding the entire history of every member into the model at once was not feasible, a sliding window sampling technique was used. For each member, overlapping windows of interactions were sampled from their full history. Over many training epochs, this technique ensures the model is exposed to the entirety of a member's sequence, allowing it to learn from both recent and long-past behaviors without requiring an impractically large context window. Sparse attention mechanisms were also employed to further extend the effective context window while maintaining computational efficiency.
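A minimal sketch of the windowing idea, assuming fixed-size windows with a fixed stride (the source does not give the actual window sizes or sampling scheme). A training loop could then draw from these windows at random across epochs.

```python
def sliding_windows(history, window_size, stride):
    """Split a long history into overlapping windows that together cover it."""
    if window_size <= 0 or stride <= 0:
        raise ValueError("window_size and stride must be positive")
    if len(history) <= window_size:
        return [list(history)]
    last_start = len(history) - window_size
    starts = list(range(0, last_start + 1, stride))
    # Add a final window so the most recent interactions are never dropped.
    if starts[-1] != last_start:
        starts.append(last_start)
    return [list(history[s:s + window_size]) for s in starts]

# A made-up history of 10 interactions, represented by their positions.
windows = sliding_windows(list(range(10)), window_size=4, stride=3)
```

Choosing a stride smaller than the window size is what produces the overlap, so interactions near a window boundary are still seen with context on both sides.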

During Inference: For real-time prediction, Key-Value (KV) caching was deployed. This technique allows the model to reuse the computations from previous steps in a sequence when generating the next prediction, drastically reducing latency and making the use of these large models feasible in a production environment.
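The idea behind KV caching can be shown with a toy single-head attention layer in plain Python. Each new interaction is projected into a key and a value once, and those are kept, so later steps only project the newest token instead of the whole history. The class, the tiny weight matrices, and the inputs are hypothetical; this is a sketch of the general technique, not Netflix's serving code.

```python
import math

def matvec(matrix, vector):
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def attend(query, keys, values):
    """Scaled dot-product attention of one query over all keys and values."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    top = max(scores)
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    dim = len(values[0])
    return [sum(w * v[d] for w, v in zip(weights, values)) / total for d in range(dim)]

class CachedAttention:
    def __init__(self, w_q, w_k, w_v):
        self.w_q, self.w_k, self.w_v = w_q, w_k, w_v
        self.keys, self.values = [], []  # the KV cache

    def step(self, x):
        """Process one new token; only this token is projected."""
        self.keys.append(matvec(self.w_k, x))
        self.values.append(matvec(self.w_v, x))
        return attend(matvec(self.w_q, x), self.keys, self.values)

def full_recompute(w_q, w_k, w_v, tokens):
    """Baseline without a cache: re-project the entire history every time."""
    keys = [matvec(w_k, t) for t in tokens]
    values = [matvec(w_v, t) for t in tokens]
    return attend(matvec(w_q, tokens[-1]), keys, values)

# Made-up 2-dimensional weights and token vectors.
w_q = [[1.0, 0.0], [0.0, 1.0]]
w_k = [[0.5, 0.1], [0.2, 0.3]]
w_v = [[1.0, 1.0], [0.0, 2.0]]
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

layer = CachedAttention(w_q, w_k, w_v)
outputs = [layer.step(t) for t in tokens]
```

The cached path and the full recomputation give the same result for the latest token; the saving is that the cached path does a constant amount of projection work per new interaction.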

Adapting the Objective Function

A simple next-token prediction objective, treating every interaction equally, was not sufficient. In the world of recommendations, not all signals are created equal. A member watching a two-hour film to completion is a much stronger indicator of satisfaction than them watching a five-minute trailer.

To align the model's objective with long-term member satisfaction, several key adaptations were made:

Weighted Prediction: A weighting scheme was introduced into the loss function. Interactions that signal higher engagement, like longer watch durations, are given a higher weight. This forces the model to prioritize learning from the most meaningful signals of member enjoyment.

Multi-Token Prediction: Instead of just predicting the very next interaction, a multi-token prediction objective was tested, where the model is tasked with predicting the next n interactions. This encourages the model to develop a longer-term perspective and avoid making myopic predictions that might satisfy an immediate need but fail to capture the broader trajectory of a member's taste.

Auxiliary Prediction Objectives: The interaction tokens are rich with metadata. This was leveraged by adding auxiliary prediction tasks to the model. For instance, in addition to predicting the next title ID, the model might also be tasked with predicting the next genre the member will interact with. This acts as a powerful form of regularization, preventing the model from overfitting on noisy title-level predictions and providing a deeper, more structured understanding of a member's underlying intent.
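Two of these adaptations, weighting and an auxiliary genre task, can be combined in one loss function, sketched below. The dictionary layout, the `aux_weight` value, and the per-example weights are assumptions for illustration; multi-token prediction is not covered here.

```python
import math

def cross_entropy(probabilities, target_index):
    """Negative log-likelihood of the correct class."""
    return -math.log(probabilities[target_index])

def weighted_loss(examples, aux_weight=0.5):
    """Engagement-weighted average of title loss plus a scaled genre loss."""
    total, weight_sum = 0.0, 0.0
    for ex in examples:
        title_loss = cross_entropy(ex["title_probs"], ex["title_target"])
        genre_loss = cross_entropy(ex["genre_probs"], ex["genre_target"])
        total += ex["weight"] * (title_loss + aux_weight * genre_loss)
        weight_sum += ex["weight"]
    return total / weight_sum

# Made-up batch: a film watched to completion (weight 1.0)
# and a short trailer view (weight 0.1).
batch = [
    {"title_probs": [0.7, 0.2, 0.1], "title_target": 0,
     "genre_probs": [0.6, 0.4], "genre_target": 0, "weight": 1.0},
    {"title_probs": [0.1, 0.1, 0.8], "title_target": 0,
     "genre_probs": [0.5, 0.5], "genre_target": 1, "weight": 0.1},
]
loss = weighted_loss(batch)
```

Because the poorly predicted trailer view carries a small weight, it contributes little to the total, so the model is pushed hardest by mistakes on high-engagement interactions.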

Part 3: The Hard Problems LLMs Don't Have to Solve

While drawing inspiration from the LLM playbook, applying these principles to the recommendation domain unearthed a unique set of challenges that required novel solutions—problems that are less common in the pure NLP world.

The "New Title" Problem (Entity Cold-Start)

Perhaps the most fundamental difference between the domains is the nature of the "vocabulary." An LLM like GPT-4 is trained on a massive but ultimately static vocabulary of words. Netflix's vocabulary, however, is anything but static. Every day, new movies, series, and games are added to the catalog. Each new title is a new "word" that the model has never seen before. Constantly retraining a model of this scale from scratch is computationally infeasible.

This is the classic entity cold-start problem, and a two-part solution was engineered directly into the foundation model's architecture:

Incremental Training: New models are never trained from a cold start. Instead, the training process is warm-started by reusing the learned parameters from the previous version of the model. Only new embeddings for the new titles added to the catalog need to be initialized. This approach dramatically reduces the computational cost and time required to keep the model up-to-date.

Metadata-Driven Embeddings: For a title on its launch day, before it has any interaction data, an initial embedding is generated by leveraging its rich metadata. The pre-existing, learned embeddings for its attributes—genres, actors, director, textual descriptions—are combined to form a composite, metadata-based embedding. The model then uses an attention mechanism based on the "age" of the title to dynamically blend this metadata-based embedding with the traditional ID-based embedding (which is learned purely from interactions). For a new title, the model leans heavily on its metadata. As it accumulates views, the model learns to rely more on its unique ID-based embedding. This was a game-changer, allowing for relevant recommendations for titles from the moment they launch.
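The blending behavior can be approximated with a much simpler stand-in. The sketch below replaces the learned, age-based attention mechanism with a fixed sigmoid gate on title age, and builds the metadata embedding by plain averaging; both choices, along with the function names and the `midpoint_days` and `steepness` parameters, are assumptions made purely for illustration.

```python
import math

def mean_vector(vectors):
    """Average a list of equal-length vectors (stand-in for 'combining' metadata)."""
    dim = len(vectors[0])
    return [sum(v[d] for v in vectors) / len(vectors) for d in range(dim)]

def id_weight(age_days, midpoint_days=14.0, steepness=0.3):
    """Share given to the ID-based embedding; grows from near 0 to near 1 with age."""
    return 1.0 / (1.0 + math.exp(-steepness * (age_days - midpoint_days)))

def blended_embedding(id_embedding, attribute_embeddings, age_days):
    metadata_embedding = mean_vector(attribute_embeddings)
    w = id_weight(age_days)
    return [w * i + (1.0 - w) * m
            for i, m in zip(id_embedding, metadata_embedding)]

# Made-up 2-dimensional embeddings for one title and two of its attributes.
id_vec = [1.0, 0.0]
attribute_vecs = [[0.0, 1.0], [0.0, 3.0]]
launch_day = blended_embedding(id_vec, attribute_vecs, age_days=0)
one_year_in = blended_embedding(id_vec, attribute_vecs, age_days=365)
```

On launch day the result sits almost entirely on the metadata average, and after a year it is effectively the ID-based embedding, mirroring the shift described above.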

The Unstable Universe of Embeddings

Another major operational challenge was the problem of embedding instability. Embeddings are at the heart of modern recommender systems—dense vector representations of members and titles. However, with each retraining of the foundation model, the entire geometric space of these embeddings would shift and rotate. The vector representing "Quentin Tarantino" in one model version might point in a completely different direction in the next, even if its relationship to other entities remained the same.

This created chaos for the dozens of downstream teams that consumed these embeddings as features for their own models, as their systems would break with each new release.

The solution was both mathematically elegant and practically transformative. After each training run, an orthogonal low-rank transformation is applied to the new embedding space. This process effectively aligns the new space with the coordinate system of the previous one, ensuring that the meaning of each dimension remains consistent over time. This provides downstream teams with a stable, unchanging map of member tastes, allowing them to build on the foundation model's work without fear of the ground shifting beneath them.
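The source does not say how the transformation is computed. One common way to align two embedding spaces is orthogonal Procrustes, which finds the orthogonal map that brings the new vectors closest to the old ones. The sketch below covers only the 2-D, rotation-only special case, where the best angle has a closed form; in higher dimensions the solution is usually obtained from a singular value decomposition. All names and data here are hypothetical.

```python
import math

def rotate(points, angle):
    """Rotate 2-D points counter-clockwise by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    return [(x * c - y * s, x * s + y * c) for x, y in points]

def best_rotation_angle(new_points, old_points):
    """Angle that minimizes the summed squared distance after rotating new onto old."""
    cross = sum(ax * by - ay * bx
                for (ax, ay), (bx, by) in zip(new_points, old_points))
    dot = sum(ax * bx + ay * by
              for (ax, ay), (bx, by) in zip(new_points, old_points))
    return math.atan2(cross, dot)

# Made-up embeddings from the previous model version.
old_space = [(1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
# Simulate a retrain that preserved all relationships but rotated the space.
new_space = rotate(old_space, 0.7)

angle = best_rotation_angle(new_space, old_space)
aligned = rotate(new_space, angle)
```

After alignment the new vectors land back on the old coordinates, so a downstream model that learned what each dimension means would keep working across the release.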

To make these distinctions clear, here is a breakdown of the key differences between a traditional LLM and Netflix's Recommendation Foundation Model:

Part 4: The Foundation Model in Action: An Engine for the Entire Business

From Model to Platform

The true power of the foundation model lies not just in its improved predictive accuracy, but in its role as a centralized piece of infrastructure for the entire company. This wasn't just about building a better "Top Picks" algorithm; it was about creating a foundational intelligence layer that could be leveraged in multiple ways, effectively transforming personalization from a series of product features into a scalable platform service.

The model was designed from the outset to be a versatile, multi-purpose engine with three primary modes of use:

Direct Predictive Model: In its most straightforward application, the model is trained to predict the next entity a user will interact with. It includes multiple "predictor heads" for different tasks, such as forecasting a member's preference for various genres. These predictions can be used directly to meet diverse business needs, like dynamically structuring the rows on the homepage.

Embeddings-as-a-Service: This has been a massive force multiplier for innovation at Netflix. The high-quality, stable embeddings for members and entities (videos, games, genres) are calculated in batch jobs and stored in a feature repository where they can be accessed by any team in the company. These embeddings now power a huge range of applications, from features in other models to candidate generation and title-to-title recommendations. Even content acquisition and marketing teams use these embeddings for offline analysis to better understand audience tastes.

Fine-Tuning: This approach democratizes access to state-of-the-art personalization. Any team at Netflix can take the pre-trained foundation model and fine-tune it on their own application-specific data. A team working on personalizing game recommendations, for example, can leverage its deep understanding of member preferences and fine-tune it to the specific nuances of the gaming domain. This allows them to achieve incredible performance with a fraction of the data and computational resources that would otherwise be required.
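As an illustration of the embeddings-as-a-service mode, a downstream team could produce title-to-title recommendations with nothing more than a nearest-neighbor search over stored vectors. The sketch below uses cosine similarity on made-up 2-dimensional embeddings; the dictionary stands in for a feature repository, and the function names are hypothetical.

```python
import math

def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def similar_titles(query_id, embeddings, top_k=2):
    """Return the ids of the top_k titles closest to the query title."""
    query = embeddings[query_id]
    scored = [(cosine_similarity(query, vec), title_id)
              for title_id, vec in embeddings.items() if title_id != query_id]
    scored.sort(reverse=True)
    return [title_id for _, title_id in scored[:top_k]]

# Made-up embeddings standing in for vectors read from a feature store.
title_embeddings = {
    "title_a": [1.0, 0.0],
    "title_b": [0.9, 0.1],
    "title_c": [0.0, 1.0],
    "title_d": [0.5, 0.5],
}
neighbors = similar_titles("title_a", title_embeddings)
```

At catalog scale a linear scan like this would typically be replaced by an approximate nearest-neighbor index, but the consuming team's logic stays this simple because the hard work lives in the embeddings.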

The Culture of A/B Testing

Of course, none of these innovations are rolled out based on a hunch or an offline metric. At Netflix, the ultimate arbiter of success is the member. Every proposed change is subjected to rigorous A/B testing.

The development of the foundation model was no exception. It was tested against the existing constellation of models in a series of large-scale online experiments involving millions of members. Its impact on key business metrics was meticulously measured: member engagement, session watch time, and, most importantly, long-term member retention. The model was only rolled out after it demonstrated statistically significant lifts in these metrics, proving that it was delivering a measurably better experience. This deeply ingrained, data-driven culture of experimentation is what gives Netflix the confidence to make massive, long-term investments in ambitious projects like this one, ensuring that engineering efforts are always directly aligned with member value.
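To give a sense of what "statistically significant lift" means for a metric like retention, here is a sketch of a two-proportion z-test comparing a control group with a treatment group. The source does not say which statistical tests Netflix uses, so this is a textbook stand-in, and the member counts are made up.

```python
import math

def two_proportion_z_test(successes_a, total_a, successes_b, total_b):
    """Return (z, two-sided p-value) for the difference in rates, B minus A."""
    rate_a = successes_a / total_a
    rate_b = successes_b / total_b
    pooled = (successes_a + successes_b) / (total_a + total_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    z = (rate_b - rate_a) / std_err
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail
    return z, p_value

# Made-up numbers: members retained out of members exposed, per test cell.
z, p_value = two_proportion_z_test(
    successes_a=9000, total_a=10000,   # control: existing models
    successes_b=9100, total_b=10000,   # treatment: foundation model
)
```

A positive `z` with a small `p_value` suggests the treatment's higher retention rate is unlikely to be chance alone, which is the kind of evidence a rollout decision would rest on.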

Conclusion: The Future is a Conversation

The evolution of personalization at Netflix has been a journey from simple prediction to deep comprehension. The system started with Cinematch, which was excellent at predicting a static rating. It then moved to a constellation of models adept at understanding shallow, short-term engagement patterns. Now, with the foundation model, a system is being built that is beginning to achieve a deep, holistic understanding of the long-term narrative of a member's taste.

This new model is the first step towards a new frontier: predicting not just the what, but the why. It's about moving beyond "what will this member watch next?" to understanding their underlying intent. Is a member looking for a lighthearted comedy after a long day, or are they settling in for a weekend binge of a complex drama? The model's ability to learn from long sequences of behavior is what unlocks this deeper, more contextual understanding.

The future of personalization at Netflix appears to be an ongoing, implicit conversation with each member. Every interaction is a piece of input that refines the model's understanding, and every recommendation is a response in that silent dialogue. While there is exciting research happening in the field of explicit conversational recommenders using LLMs, the real power at Netflix's scale lies in perfecting this seamless, implicit interaction.

The foundation model is not the final chapter in this personalization story. Rather, it is the powerful new engine that will likely drive the next decade of innovation. It's the platform that will enable the creation of experiences that are hard to even imagine yet, all in service of the simple, foundational goal that started it all: connecting people to the stories they'll love.