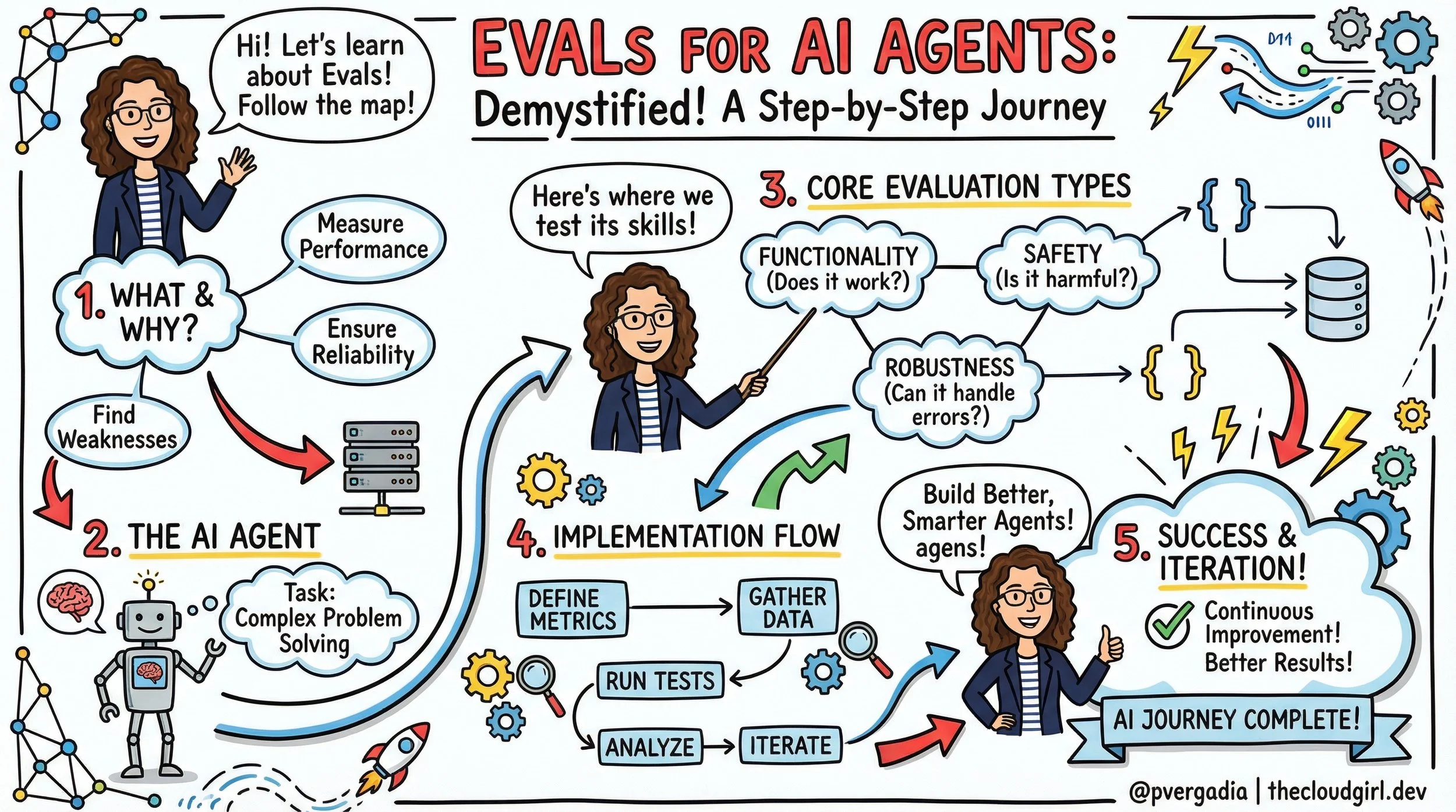

Demystifying Evals: How to Test AI Agents Like a Pro

If you’ve moved from building simple RAG pipelines to autonomous AI agents, you’ve likely hit a wall: evaluation.

With a standard LLM call, you have a prompt and a response. It’s easy to grade. But an agent operates over multiple turns, calls tools, modifies environments, and corrects its own errors. How do you test a system that is non-deterministic and whose "answer" isn't just text, but a side effect in a database or a file system?

Anthropic recently shared their internal playbook on agent evaluation. Here is a developer's guide to building rigorous, scalable evals for AI agents.

The Flying Blind Problem

When you first build an agent, you probably test it manually ("vibes-based" testing). This works for prototypes but breaks at scale. Without automated evals, you are flying blind. You can't distinguish real regressions from noise, and you can't confidently swap in a new model (like moving from Claude 3.5 Sonnet to a newer version) without weeks of manual re-testing.

The Golden Rule: Start building evals early. They force you to define what "success" actually looks like for your product.

The Anatomy of an Agent Eval

An agent evaluation is more complex than a standard unit test. It generally consists of seven key components:

The Task: The specific scenario or test case (e.g., "Fix this GitHub issue").

The Harness: The infrastructure that sets up the environment and runs the agent loop.

The Agent Loop: The model interacting with tools, reasoning, and the environment.

The Transcript: The full log of tool calls, thoughts, and outputs.

The Outcome: The final state of the environment (e.g., Was the file edited? Does the row exist in the DB?).

The Grader: The logic that scores the transcript or the outcome.

The Suite: A collection of tasks grouped by capability (e.g., "Refund Handling" suite).
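To make the seven components concrete, here is a minimal sketch of how they might fit together in code. Every name here (`Task`, `Transcript`, `run_task`, `run_suite`, and the agent-step and grader signatures) is hypothetical and not taken from any specific framework: the harness builds a fresh environment, runs the agent loop up to a turn cap, records the transcript, and hands the transcript plus the final environment state (the outcome) to the graders.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Task:
    """One test case, e.g. "Fix this GitHub issue"."""
    task_id: str
    prompt: str
    max_turns: int = 20


@dataclass
class Transcript:
    """Full log of one trial: one dict per tool call, thought, or output."""
    steps: list = field(default_factory=list)


# Hypothetical signatures, not a real framework's API:
# an agent step sees the task, the live environment, and the transcript so far,
# and returns its next step as a dict ({"type": "final"} ends the loop).
AgentStep = Callable[[Task, dict, Transcript], dict]
# A grader scores the transcript and/or the outcome (final environment state).
Grader = Callable[[Transcript, dict], bool]


def run_task(task: Task, agent_step: AgentStep, graders: list[Grader],
             setup_env: Callable[[], dict]) -> dict:
    """The harness: build a fresh environment, run the agent loop, then grade."""
    env = setup_env()  # isolated per trial so runs cannot leak state
    transcript = Transcript()
    for _ in range(task.max_turns):  # the agent loop, capped at max_turns
        step = agent_step(task, env, transcript)
        transcript.steps.append(step)
        if step.get("type") == "final":
            break
    # After the loop, `env` holds the outcome: whatever the agent changed.
    passed = all(grade(transcript, env) for grade in graders)
    return {"task_id": task.task_id, "passed": passed, "turns": len(transcript.steps)}


def run_suite(tasks: list[Task], **harness_kwargs) -> float:
    """A suite groups tasks by capability; report the fraction that pass."""
    results = [run_task(task, **harness_kwargs) for task in tasks]
    return sum(r["passed"] for r in results) / len(results)
```

Note that `all()` over an empty grader list returns `True`, so a real harness would probably reject tasks that have no graders attached.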

3 Strategies for Grading Agents

You cannot rely on just one type of grader. A robust system uses a "Swiss Army Knife" approach:

1. Code-Based Graders (The "Unit Test")

These are fast, cheap, and deterministic.

Best for: Verifying outcomes.

Examples: Regex matching, static analysis (linting generated code), checking if a file exists, running a unit test against generated code.

Pros: Zero hallucinations, instant feedback.

Cons: Can be brittle; misses nuance.
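A few code-based outcome checks might look like the sketch below. The function names are illustrative, and `code_compiles` is only a cheap stand-in for real static analysis: it uses Python's built-in `compile()` to catch syntax errors, whereas a production grader would more likely run an actual linter.

```python
import re
from pathlib import Path


def file_exists(path: Path) -> bool:
    """Outcome check: did the agent create the expected file?"""
    return path.is_file()


def file_matches(path: Path, pattern: str) -> bool:
    """Outcome check: does the file's content match a regex?"""
    if not path.is_file():
        return False
    return re.search(pattern, path.read_text(encoding="utf-8")) is not None


def code_compiles(source: str) -> bool:
    """Cheap static check: is the generated Python syntactically valid?
    (A stand-in for a real linter, which would catch far more.)"""
    try:
        compile(source, "<generated>", "exec")
        return True
    except SyntaxError:
        return False
```

Each check is deterministic and runs in milliseconds, which is exactly why these graders are cheap enough to run on every trial. The brittleness is visible too: `file_matches` fails if the agent writes "Total = 42" instead of "Total: 42".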

2. Model-Based Graders (LLM-as-a-Judge)

Using an LLM to grade another LLM.

Best for: Assessing soft skills or reasoning.

Examples: "Did the agent adopt a polite tone?", "Did the agent logically deduce the error before fixing it?"

Pros: Flexible; handles open-ended output.

Cons: Non-deterministic; can be expensive; requires calibration.
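A minimal LLM-as-a-judge grader could be structured like this sketch. The `call_model` parameter is a hypothetical placeholder for whatever LLM client you use (a function from prompt text to response text), and the 1-5 rubric, JSON reply format, and pass threshold of 4 are assumptions chosen for illustration. One deliberate design choice: output the judge fails to format correctly counts as a failed grade rather than a silent pass.

```python
import json
from typing import Callable

# Hypothetical: any function that sends a prompt to an LLM and returns its text.
CallModel = Callable[[str], str]

RUBRIC_PROMPT = """You are grading an AI agent's transcript.
Criterion: {criterion}

Transcript:
{transcript}

Respond with JSON only: {{"score": <integer 1-5>, "reason": "<one sentence>"}}"""


def llm_judge(transcript: str, criterion: str, call_model: CallModel,
              threshold: int = 4) -> dict:
    """Ask a model to score one criterion; pass if score >= threshold."""
    prompt = RUBRIC_PROMPT.format(criterion=criterion, transcript=transcript)
    raw = call_model(prompt)
    try:
        verdict = json.loads(raw)
        score = int(verdict["score"])
    except (ValueError, KeyError, TypeError):
        # Unparseable judge output is treated as a failure, never a pass.
        return {"score": None, "passed": False, "reason": "unparseable judge output"}
    return {"score": score, "passed": score >= threshold,
            "reason": verdict.get("reason", "")}
```

Because the judge is itself non-deterministic, you would typically pin its model version and temperature and re-check it against human labels whenever either changes.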

3. Human Graders (The Gold Standard)

Best for: Calibrating your Model-Based graders and final QA.

Strategy: Use humans to grade a subset of logs, then tune your LLM judge to match the human scores.
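One way to quantify "match the human scores" is to compare pass/fail verdicts on the same transcripts. The sketch below reports raw agreement and Cohen's kappa (agreement corrected for chance), plus a count of "false passes", where the judge approved something a human rejected. The function name and the choice of these three metrics are assumptions for illustration; the source does not prescribe a specific calibration statistic.

```python
def agreement_stats(human: list[bool], judge: list[bool]) -> dict:
    """Compare LLM-judge verdicts with human verdicts on the same transcripts."""
    if len(human) != len(judge) or not human:
        raise ValueError("need equal-length, non-empty label lists")
    n = len(human)
    observed = sum(h == j for h, j in zip(human, judge)) / n
    # Chance agreement for Cohen's kappa, from each rater's pass rate.
    p_h = sum(human) / n
    p_j = sum(judge) / n
    expected = p_h * p_j + (1 - p_h) * (1 - p_j)
    # Kappa is undefined when both raters give one constant label; report 1.0.
    kappa = 1.0 if expected == 1 else (observed - expected) / (1 - expected)
    # Judge passed what a human failed: usually the costliest disagreement.
    false_passes = sum((not h) and j for h, j in zip(human, judge))
    return {"agreement": observed, "kappa": kappa, "false_passes": false_passes}
```

Raw agreement alone can look high when almost every transcript passes; kappa penalizes a judge that simply says "PASS" to everything, which is why it is worth tracking alongside agreement while you tune the judge's prompt.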

Architecting Evals by Agent Type

Different agents require different evaluation architectures.

For Coding Agents

Coding agents are actually the "easiest" to evaluate because code is executable: you can run it and check the result objectively.

The Setup: Give the agent a broken codebase or a feature request.

The Check: Run the actual test suite. If the tests pass, the agent succeeded.

Advanced: Use Transcript Analysis to check how the agent solved the problem. Did it burn 50 turns trying to guess a library version? Did it delete a critical config file? (Use an LLM grader to review the diff.)

For Browser/GUI Agents

These are tricky because the output is actions on a screen.

Token Efficiency vs. Latency: Extracting the full DOM is accurate but token-heavy. Screenshots are token-efficient but slow.

The Check: Don't just check the final URL. Check the backend state. If the agent "bought a laptop," check the mock database to see if the order exists.
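A backend-state check for the laptop example might look like the sketch below, using Python's built-in `sqlite3` as the mock store. The `orders` table, its columns, and the `'placed'` status value are assumptions made up for illustration; the point is that the grader queries the data the agent was supposed to change, not the page it ended on.

```python
import sqlite3


def make_mock_store() -> sqlite3.Connection:
    """Fresh in-memory store per trial, so runs cannot contaminate each other."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (user_id TEXT, product TEXT, status TEXT)")
    return conn


def order_exists(conn: sqlite3.Connection, user_id: str, product: str) -> bool:
    """Backend-state check: did the agent's 'purchase' actually create an order?"""
    row = conn.execute(
        "SELECT COUNT(*) FROM orders WHERE user_id = ? AND product = ? AND status = 'placed'",
        (user_id, product),
    ).fetchone()
    return row[0] > 0
```

An agent that lands on a "/checkout/success" URL but never submitted the form would still fail this check, which is the failure mode a URL-only grader misses.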

Handling Non-Determinism (pass@k)

Agents are stochastic. Running a test once isn't enough. Anthropic recommends borrowing metrics from code generation research:

pass@1: Did the agent succeed on the first try? (Critical for cost-sensitive tasks.)

pass@k: If we run the agent $k$ times (e.g., 10 times), what is the probability that at least one run succeeds?

pass^k: The probability that all $k$ trials succeed. (Use this for regression testing, where consistency is paramount.)
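If each trial independently succeeds with probability $p$, then pass@k is $1 - (1 - p)^k$ and pass^k is $p^k$, so the two metrics diverge quickly as $k$ grows. In practice you estimate them from $n \ge k$ recorded trials with $c$ successes. The sketch below uses the unbiased pass@k estimator popularized by OpenAI's HumanEval work, $1 - \binom{n-c}{k} / \binom{n}{k}$, and the analogous estimator $\binom{c}{k} / \binom{n}{k}$ for pass^k; the function names are illustrative.

```python
from math import comb


def _check(n: int, c: int, k: int) -> None:
    if not (0 <= c <= n and 1 <= k <= n):
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate P(at least one of k trials succeeds) from n trials, c successes."""
    _check(n, c, k)
    if n - c < k:
        return 1.0  # every size-k subset of trials contains a success
    return 1.0 - comb(n - c, k) / comb(n, k)


def pass_hat_k(n: int, c: int, k: int) -> float:
    """Estimate P(all k trials succeed); comb(c, k) is 0 when k > c."""
    _check(n, c, k)
    return comb(c, k) / comb(n, k)
```

For example, an agent that succeeded on 5 of 10 recorded trials has pass@1 = pass^1 = 0.5, but pass@2 is about 0.78 while pass^2 is about 0.22: the same agent looks strong on a "can it ever do this?" metric and weak on a "does it do this every time?" metric.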

The Developer's Checklist: How to Start

If you have zero evals today, follow this progression:

Start with "Capability Evals": Pick 5 tasks your agent fails at. Write evals for them. This is your "hill to climb."

Add "Regression Evals": Pick 5 tasks your agent succeeds at. Write evals to ensure you never break them.

Read the Transcripts: Don't just look at the PASS/FAIL boolean. Read the logs. If an agent failed, were the instructions unclear? Did the grader hallucinate?

Watch for Saturation: If your agent hits 100% on a suite, that suite is no longer helping you improve capabilities. It has graduated to a pure regression test. You need harder tasks.
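The checklist can be partially automated with a small triage step over suite results, sketched below. The `SuiteResult` type and the rules are hypothetical: it assumes regression suites are expected to stay at 100% (for stochastic agents you might instead require a pass^k target) and flags capability suites that have reached 100% as saturated.

```python
from dataclasses import dataclass


@dataclass
class SuiteResult:
    name: str
    kind: str  # "capability" (hill to climb) or "regression" (must not break)
    passed: int
    total: int

    @property
    def pass_rate(self) -> float:
        return self.passed / self.total


def review(results: list[SuiteResult]) -> list[str]:
    """Hypothetical triage rules for a nightly eval run."""
    notes = []
    for r in results:
        if r.kind == "regression" and r.pass_rate < 1.0:
            notes.append(f"REGRESSION in {r.name}: {r.passed}/{r.total}; read the failing transcripts")
        elif r.kind == "capability" and r.pass_rate == 1.0:
            notes.append(f"SATURATED: {r.name} now only guards regressions; add harder tasks")
    return notes
```

A report like this tells you where to look; it does not replace reading the transcripts behind each flagged suite.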

Tooling

You don't always need to build from scratch. The ecosystem is maturing:

Harbor: Good for containerized/sandbox environments.

Promptfoo: Excellent for declarative, YAML-based testing.

LangSmith / Braintrust: Great for tracing and online evaluation.

Building agents without evals is like writing code without a compiler. You might feel like you're moving fast, but you'll spend twice as long debugging in production. Start small, verify outcomes, and automate the loop.